Putting the experiment in context

Computers are no longer limited to “idiot savant” roles in scientific laboratories. They are gearing up to become research assistants, helping scientists make sense of the vast body of information and the progress in their own fields and in others.

Computers do not drive scientific research. Scientists are the drivers; but computers could become the drivers’ navigation systems…


My previous column discussed the growing presence of computers as planners and performers of scientific experiments, able to create hypotheses and devise experiments to test the hypotheses, if only in a limited domain. A prime example of such progress is the “Robot Scientist Project” team, led by principal investigator Professor Ross King of the Department of Computer Science in the University of Wales, Aberystwyth.

This robotic research already contributes to our detailed understanding of the interaction of genes, proteins, and metabolites in yeast, but there is another opportunity for artificial intelligence in scientific research, which may have even higher potential: helping scientists track and make sense of the vast amount of existing and new information in their own field, not to mention other scientific fields that might be relevant. This challenge is extremely difficult, and it is growing more so every year. It has been a couple of centuries since a scientist could hope to understand and have knowledge of many aspects of science – i.e. from biology to physics and math. As science progressed, this became increasingly hard, and today it is all but impossible to have real knowledge of even one sub-area of science: nobody reads even a sizeable fraction of the papers published about, say, quantum dynamics, even if this is their own area. What opportunities are we missing as a result? What new insights, synergies, discoveries, innovations and surprises would await us if we could somehow detect similar patterns, discover promising regularities or irregularities to explore, and adopt results or research tools used in one area to boost the progress of another?

One direction that might help with this challenge is to express the contents of scientific papers in a formal language. If this could be achieved, software could automatically compare the contents to descriptions of other research, and highlight opportunities for bringing together the findings from several papers – possibly from quite distant disciplines.

Partial solutions still make good science


The idea of finding a common formal way of describing experiments would sound quite familiar to technologists, who have become used to specifying all sorts of formal descriptive “languages” for anything from financial transactions to medical records. However, can we really boil down all of a scientific paper to a series of specifications?

The answer is no – certainly not with the current state of the art in artificial intelligence.

But look a bit deeper into this question: the key word in the above question is “all”. If we could translate all of the research into formal specification, there would not be any need for publishing the paper itself – the formalization would say all there is to know about the research, in clear and non-ambiguous terms. Now, relax the requirement from “all” to “some”. This means we have to keep the paper itself, but we can “decorate” it with a series of formal descriptions which partially describe its contents.

What good is partial description? Actually, quite a lot, as described below. This is fortunate, because as things stand today there is no automatic way to generate such descriptions from scientific papers. It remains up to humans to perform this chore, and these people must know their subject very well. Our only hope of seeing this happen is if these people could get something back for this investment.

EXPO and Friends


One project attempting exactly this kind of partial formal specification is EXPO. Perhaps not surprisingly, it was developed by members of the same team that developed the Robot Scientist. The Robot Scientist deals with formal representations of facts, hypotheses, research methods, laboratory equipment, experimental designs, and reporting of results. Therefore, it is natural for this project to both consume and produce formalized research.

While EXPO is not the only effort along these lines, it serves as an excellent example of the potential strengths and limitations of this approach.

In a talk, Professor Larissa Soldatova of the Department of Computer Science at the University of Wales, Aberystwyth, described the ontology (according to Schulze-Kremer’s definition, “a concise and unambiguous description of what principle entities are relevant to an application domain and the relationship between them”) used by EXPO. This ontology is used, for example, to describe an experiment by answering many questions, including: What is the domain of research? What are the research hypotheses? Which independent and dependent variables are used? How are they set or measured? Which tools and materials are required? How is the experiment performed in each of its steps?

Thus far, this is no different from the methods taught to every scientist when guiding the writing of scientific research reports. The difference is evident when you realize that as far as possible, each question must be answered by making one or more selections from a defined and mutually agreed set of answers. For example, the domain of research may be specified using a familiar classification system, such as the one used by the Library of Congress; the design of the experiment may be selected out of design categories well-known to scientists – e.g. “factorial design”; action goals may be “sterilize”, “weigh”, “measure”; etc. EXPO descriptions are highly detailed. If we focus just on the experimental procedure, we find the requirement to specify for each step of the experiment, types and quantities of materials, duration of each step, required preparations, model and make of the measurement instruments, and potentially much more.
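To make the idea of “selecting from an agreed set of answers” concrete, here is a minimal Python sketch. It is not EXPO’s actual schema: the class names, the fields, and the tiny vocabularies are hypothetical, and only the example terms “factorial design”, “sterilize”, “weigh” and “measure” come from the description above.

```python
from dataclasses import dataclass, field
from enum import Enum


class Design(Enum):
    # Hypothetical controlled vocabulary; only "factorial design" is from the text.
    FACTORIAL = "factorial design"
    RANDOMIZED_BLOCK = "randomized block design"


class ActionGoal(Enum):
    STERILIZE = "sterilize"
    WEIGH = "weigh"
    MEASURE = "measure"


@dataclass
class Step:
    goal: ActionGoal
    material: str
    quantity: float
    unit: str
    duration_minutes: float


@dataclass
class ExperimentDescription:
    domain: str
    design: Design
    hypotheses: list[str] = field(default_factory=list)
    steps: list[Step] = field(default_factory=list)

    def missing_parts(self) -> list[str]:
        """Name the sections that the encoder has left empty."""
        missing = []
        if not self.hypotheses:
            missing.append("hypotheses")
        if not self.steps:
            missing.append("steps")
        return missing


description = ExperimentDescription(
    domain="Biology",  # illustrative label, standing in for a library classification
    design=Design("factorial design"),  # looking up an unknown term raises ValueError
    steps=[Step(ActionGoal("weigh"), "glucose", 2.0, "g", 1.0)],
)
print(description.missing_parts())
```

Because every answer must come from a fixed vocabulary, a free-text term such as “improvised design” is rejected at once, and a description with no stated hypotheses is flagged as incomplete. That second check is the kind of gap the manual encoding is meant to force into the open.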

EXPO also includes logic expressions, which may be used in such areas as formalizing the research hypothesis – e.g. “if two species have an ancestor which is not the ancestor of any other intermediate species …”.

Formalization Pays Off


To evaluate EXPO outside the Robot Scientist’s domain, Professors Soldatova and King randomly selected one issue of the journal Nature, and within that issue they selected two papers from maximally distant areas of science: quantum physics and evolutionary biology. They undertook the task of manually encoding both papers into the EXPO “language”. This isn’t simple or fast – when printed out, the description of the physics paper ran to about six pages, and that of the biology paper weighed in at over twenty. Admittedly, the printed formalization is laid out so that it is far less dense than more familiar scientific publications, with plenty of white space. On the other hand, not all the information in the original papers is encoded within the EXPO description.

However, the effort of explicitly specifying (almost) all the details, and the interaction between the details, paid off: Soldatova and King found several issues with both papers – issues which were brought to light precisely because of EXPO’s requirement for going into explicit detail. Among the issues which were discovered was that the authors of the quantum physics paper failed to justify the implicit weights they assigned to experimental results. For the evolutionary biology paper, no hypotheses are stated, but several hypotheses are implicit in the text. Normally the results of the research should be analyzed in terms of to what extent they support (or contradict) the hypotheses, but for two of the hypotheses the paper does not report any conclusions. It should be clear that there is no intent to criticize the authors of these papers or the editors of Nature (and the criticism stated by Soldatova and King might be challenged by the original papers’ authors) – the point is that even for the highest quality of science, there is much to be gained by this kind of formalization process.

Still, we started out by asking whether computerized processes could help the working scientist in finding relevant information. From this point of view, the abovementioned benefits of manual encoding for a single paper may be a side bonus. The real goal is bringing together information from all across science to validate, cross-correlate, inform, and inspire further research.

Soldatova and King’s paper shows that this goal may be achievable. First, some discrepancies were found when comparing the taxonomic classifications (e.g. phylum, genus, or family) used in the evolutionary biology paper to the most recent taxonomies at the time the paper was written. In the general case (not necessarily in this instance), such discrepancies may occur as a result of the impossibility of tracking all the latest publications. Since taxonomy, like many other areas in science, is highly amenable to formalization (hierarchical trees of categories, in this case), the discrepancies could have been discovered by computers comparing formalizations to the latest updates of the taxonomy. This finding may not seem inspiring, until you consider how many scientific papers may depend on definitions, findings, and theories which were later modified. Researchers referring to these papers may well propagate the old information in their new research, especially if the out-of-date information is not within the specialties of the researchers or the peer reviewers.
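The taxonomy check described above is simple enough to sketch in a few lines of Python. Everything here is made up for illustration: the taxon names, the “current” taxonomy table, and the function name are assumptions, not data or code from Soldatova and King’s study.

```python
# Hypothetical "latest" taxonomy: each taxon maps to its current parent.
CURRENT_PARENT = {
    "Genus A": "Family X",
    "Genus B": "Family Y",
    "Family X": "Order P",
    "Family Y": "Order P",
}


def find_discrepancies(claimed_parent, current_parent):
    """Return (taxon, claimed, current) for each outdated or unknown placement."""
    discrepancies = []
    for taxon, claimed in sorted(claimed_parent.items()):
        current = current_parent.get(taxon)  # None if the taxon is not listed
        if current != claimed:
            discrepancies.append((taxon, claimed, current))
    return discrepancies


# Placements as they might be encoded from a (made-up) paper.
paper_claims = {"Genus A": "Family X", "Genus B": "Family X"}
print(find_discrepancies(paper_claims, CURRENT_PARENT))
# prints [('Genus B', 'Family X', 'Family Y')]
```

The comparison itself is trivial. The hard part, as the article argues, is getting the paper’s claims into a form where such a lookup is possible at all.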

Even more interestingly, both studies were found to use the same analysis method, called “stochastic branching processes”. This unexpected finding is a tantalizing preview of the ability of such widely-separated domains in science to share tools and methods. This discovery of a shared tool, and therefore the opportunity for cross-pollination of ideas and techniques, is quite surprising when you consider that the two papers were selected almost at random.

The Dream …


Imagine a scientist setting out to study an intriguing problem in some area – let’s take high-temperature superconductivity for example. Her first step is to write down the experimental plan, even though she’s not yet sure about several crucial details. She doesn’t use a word processor or TeX (the scientists’ favorite typesetting system). Instead, she starts a formal definition of the research problem, the materials, and some of the experimental procedures. The writing turns into a collaboration between her and the computer. She sets the subject and the guidelines, but the computer fills in a lot of the detail, links prior research to her hypotheses and to her experimental methods, suggests the best fit of equipment, duration, material, and temperature for each step, and proposes appropriate statistical and numerical analysis tools.

As she works, an unobtrusive list of relevant research appears at the bottom of her screen. She ignores much of it – she’s highly familiar with her field. However, since she’s planning to use an innovative combination of ceramics and rare earth metals under high pressure, some sea-floor research is also highlighted. Having never dealt with oceanography before, the scientist is surprised to discover that minerals with some of the same characteristics have been found to exhibit unexpected structures which may have some bearing upon her own work. Furthermore, when she’s looking for mathematical simulation tools to predict the structure of the complex molecules she’s considering, she finds that she may be able to adopt tools from the domain of protein folding. Once she starts investigating this, a new suggestion comes up, involving the use of “chaperone proteins” as scaffolding during the manufacturing of these molecules. But can these ideas work under the pressures she’s considering? The software now brings up some recent research on molecular biology in microorganisms living deep under the earth…

The above example probably doesn’t make much scientific sense, but I hope it gives the flavor of how scientific work could be transformed – as if a whole community of scientists, from all disciplines, could be watching your speculations and ideas and contributing their own knowledge.

… and the Reality

Now re-enter reality. What would it take to make this dream happen? And is it really a good dream rather than a nightmare?


There are at least two types of obstacles. One has to do with the “language” used for formalizing research descriptions and the search mechanisms which we expect to find the interesting connections between studies. These issues go together to some extent: the better we design the language, the easier it gets to search and compare descriptions written in that language. And yet, the formulation and search are both highly challenging. As with a search engine, we want the real items of interest to pop up in the top ten search results – very few people look beyond the first items. Unlike in a search engine, the degree of relevance is based not on text comparison but on similarity of concepts (e.g. “molecular structure” is related to “protein folding”) and on similarity of chains of concepts (e.g. pressure is known to affect the speed of certain kinds of molecular reactions; “deep under the earth” is related to higher pressures than conventional laboratory conditions; some microorganisms live in these conditions; microorganisms need molecular reactions to live). Despite efforts such as Cyc (see also my column “Don’t burn the Cat”) and various Semantic Network initiatives, there is still a long way to go before we can rely on such mechanisms. Still, in this context we don’t require that the search always be successful; one good suggestion every now and then would be impressive and useful. Let’s just remember that if there are always thousands of bad suggestions, nobody will look at the good one when it finally arrives.
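A toy version of “similarity of chains of concepts” can be sketched as a search over a graph of related concepts. The Python below is only an illustration under stated assumptions: the concept links are hand-written to follow the example chain in the previous paragraph, and ranking a suggestion by the length of the shortest chain is one possible relevance measure, not one the article prescribes.

```python
from collections import deque

# Hypothetical concept links, loosely following the chain in the text.
RELATED = {
    "high pressure": ["reaction speed", "deep under the earth"],
    "deep under the earth": ["high pressure", "microorganisms"],
    "microorganisms": ["deep under the earth", "molecular reactions"],
    "molecular reactions": ["microorganisms", "reaction speed"],
    "reaction speed": ["high pressure", "molecular reactions"],
    "molecular structure": ["protein folding"],
    "protein folding": ["molecular structure"],
}


def concept_chain(start, goal, related):
    """Breadth-first search: return a shortest chain of concepts, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for neighbour in related.get(path[-1], []):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(path + [neighbour])
    return None


print(concept_chain("high pressure", "microorganisms", RELATED))
# prints ['high pressure', 'deep under the earth', 'microorganisms']
print(concept_chain("high pressure", "protein folding", RELATED))
# prints None
```

A real system would need weighted links, far larger vocabularies, and some way to prune the flood of weak chains, which is exactly where the “thousands of bad suggestions” problem arises.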

The second type of obstacle is the effort required for the formalization. Scientists have too much to do already, so they need a very good reason in order to learn the new language and to dedicate significant portions of their valuable time writing the descriptions. Can we delegate it to someone else? Maybe, but that “someone else” must be a scientist too, so this direction isn’t very promising, and the problem remains. Even if the software can meet all the expectations described in the above dream, a significant fraction of science must be described to the software before new and beneficial connections may be drawn between remote scientific paradigms. In other words, it’s a lot of work uphill before the great ride downhill.

Can it happen by forcing scientists to work this way, by having leading science journals demand a formalized description of each paper submitted to them? I believe this is quite unlikely. Even ignoring the question of ethics, it doesn’t make sense to demand this until we know it delivers the benefits. Unfortunately, the benefits won’t appear until enough scientists (human or robot – see below) do elect to attach formalized descriptions.

How can we make it easier and faster to write the descriptions? Even with current state-of-the-art technology, we don’t know how to write a program that automatically translates a scientific paper into a formal description. However, we can write a program that gives some automated support to the formalization process. It would start as simple software for filling in on-screen forms, but with time it would grow to offer helpful hints, based on learning from the way similar passages in other papers have been manually translated.

An even more promising direction is to give scientists software which encourages them to start with writing the formalization before writing the paper. The software will then help in writing the paper itself through automated learning mechanisms and the adaptation of conventional textual structures used in the more routine parts of the paper, such as describing experimental procedures.

Lastly, the introduction of “Robot Scientists” (discussed in my previous column) carries some promise. A human scientist will be more inclined to write formal descriptions if these could be used by a robot to automatically perform the experiment. This could extend well outside experimental science: theoretical physicists set up equations describing some model and use software-assisted symbolic or numeric mathematics to explore the implications of these equations; evolutionary biologists use sophisticated software to compare the genetic codes of various species and construct “cladograms”. These and many other examples could also benefit from automation in the same sense. The other direction of influence is more drastic: in the “Robot Scientist Project” and similar initiatives, the robot designs its own experiments (in a limited scientific domain), automatically executes them, and analyzes the results. It already produces formalized descriptions, since they are a perfect match for the way the robot “thinks” – for the robot, the tough part is writing the human-readable paper. If the number of studies performed robotically were to rise significantly, it could form the “critical mass” of formalized research which would be sufficient to deliver the benefits of searching and correlating within that mass. Once the critical mass and its benefits are achieved, we can imagine a very fast conversion of all new research to a combination of the formal description and the free-text, human-friendly form that it accompanies. We can even imagine that, at least for some research, the free-text form will diminish, maybe shrinking down into the abstract or disappearing altogether.

A Map of Science


Richard Feynman, the famous American physicist, was educating himself about feline anatomy at one point in his eccentric career. Wanting to see an anatomical chart, he asked a flustered librarian “Do you have a map of the cat?”

This story not only shows how scientists from different disciplines may find even each other’s basic terminology incompatible, but it also raises the question: “Do we have a map of science?” If we did, we could use it to discover “the shape of the land”, guide our explorations, and hint where we might find fertile unexplored territory.

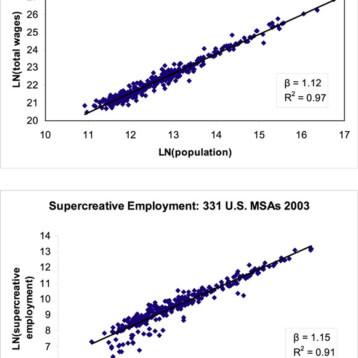

There is a simple way to gauge the degree of proximity or distance between any two scientific disciplines. Given papers concerned with discipline A, and papers concerned with discipline B, we could say that A is well-connected to B if papers from A often refer to papers from B (to be precise, this definition implies that the distance from A to B may not be the same as the distance from B to A, but we’ll ignore this here). It is usually quite easy to know which discipline any paper belongs to, via the journal in which the paper appeared as well as via the keywords used in classifying papers.
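As a rough illustration of this measure, the Python sketch below counts how often papers in one discipline cite papers in another. The papers, their disciplines, and the citation pairs are invented, and normalizing by the citing discipline’s total outgoing citations is just one assumed way to turn counts into a “connection strength”.

```python
from collections import Counter

# Made-up data: discipline of each paper, and (citing, cited) pairs.
DISCIPLINE = {"p1": "physics", "p2": "physics", "c1": "chemistry", "b1": "biology"}
CITATIONS = [("p1", "c1"), ("p2", "c1"), ("c1", "b1"), ("c1", "p1"), ("p1", "p2")]


def connection_strength(discipline, citations):
    """Share of each discipline's outgoing citations that land in each discipline
    (including its own)."""
    outgoing = Counter()
    between = Counter()
    for citing, cited in citations:
        a, b = discipline[citing], discipline[cited]
        outgoing[a] += 1
        between[(a, b)] += 1
    return {pair: count / outgoing[pair[0]] for pair, count in between.items()}


strength = connection_strength(DISCIPLINE, CITATIONS)
print(strength[("physics", "chemistry")])  # 2 of physics' 3 citations
print(strength[("chemistry", "physics")])  # 1 of chemistry's 2 citations
```

The two printed values differ, which shows the asymmetry mentioned in the parenthetical remark: physics can lean on chemistry more heavily than chemistry leans on physics.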

The network created by drawing a dot for each paper, and drawing a link between point A and point B if paper A references paper B, has been studied intensively. Among other things, it has been used for name disambiguation – figuring out when a single author may use slightly different versions of his name (e.g. “Jane Smith” vs. “J. H. Smith”) or when several authors have the same name. In the second case, if we find some papers by “Jane Smith” to be about plasma physics and others to be about anthropology, it’s a good guess that they are not written by the same person.

The same network has also been used to draw maps. The idea is to place all disciplines on the map so that the nearer any two disciplines are, the more cross-references we may expect to find between papers in these two disciplines. This isn’t easy to do, since we need to respect the distances between all pairs of disciplines. One way of tackling this is to treat it as an optimization problem. We set up the network of dots and links as described above, and assign an attractive force to each link. Thus, if there are many links between two points, there will be a strong attraction between them. To avoid having all the dots attract each other into one point, we also introduce a force that repels points from each other. Now we can define a “total energy” measure for the network, so that the attractive forces cause energy to drop when two points attracting each other come closer together, while the repelling forces do the opposite. The challenge is then to adjust distances so that the network settles at a low energy. This is a job for a fast computer, and it typically involves artificial intelligence techniques such as genetic algorithms, as shown in some recent work. In practice, it may be very difficult to find the minimum energy, and there may be many different configurations with just about the same energy, so we can’t claim we have the “one true map”. Moreover, with the breakneck speed at which new scientific results are published, yesterday’s map won’t be the same as today’s. Still, the maps resulting from such processes make enough intuitive sense to be acceptable, while including enough unexpected relationships to justify the effort.
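The energy idea can be tried out on a toy scale. The Python sketch below makes several assumptions that are not in the original work: the energy is taken to be a spring term (half the link weight times the squared distance) plus a repulsion term (a constant divided by the distance), the link weights are invented, the points start evenly spaced on a circle, and plain gradient descent stands in for the genetic algorithms mentioned above.

```python
import math


def energy(pos, links, repulsion=1.0):
    """Total energy: spring-like attraction along links plus pairwise repulsion."""
    names = sorted(pos)
    total = 0.0
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            d = math.dist(pos[a], pos[b]) + 1e-9
            total += 0.5 * links.get((a, b), 0) * d * d + repulsion / d
    return total


def initial_positions(names):
    """Spread the points evenly on a unit circle (an arbitrary starting choice)."""
    n = len(names)
    return {
        name: [math.cos(2 * math.pi * k / n), math.sin(2 * math.pi * k / n)]
        for k, name in enumerate(names)
    }


def layout(links, names, steps=500, step_size=0.01, repulsion=1.0):
    """Move every point a little downhill in energy, many times over."""
    names = sorted(names)
    pos = initial_positions(names)
    for _ in range(steps):
        grad = {name: [0.0, 0.0] for name in names}
        for i, a in enumerate(names):
            for b in names[i + 1:]:
                dx = pos[a][0] - pos[b][0]
                dy = pos[a][1] - pos[b][1]
                d = math.hypot(dx, dy) + 1e-9
                # Derivative of the pair's energy with respect to their distance.
                slope = links.get((a, b), 0) * d - repulsion / (d * d)
                for name, sign in ((a, 1), (b, -1)):
                    grad[name][0] += sign * slope * dx / d
                    grad[name][1] += sign * slope * dy / d
        for name in names:
            pos[name][0] -= step_size * grad[name][0]
            pos[name][1] -= step_size * grad[name][1]
    return pos


# Invented link weights, keyed by alphabetically ordered pairs of disciplines.
LINKS = {
    ("chemistry", "physics"): 5,
    ("biology", "chemistry"): 1,
    ("biology", "physics"): 1,
}
NAMES = ["biology", "chemistry", "physics"]
final = layout(LINKS, NAMES)
print(math.dist(final["chemistry"], final["physics"]))
print(math.dist(final["biology"], final["chemistry"]))
```

With these weights, the heavily linked pair (chemistry and physics) ends up closer together than the weakly linked pair, and the final energy is lower than the starting one. With three points this is easy; with thousands of disciplines or millions of papers the energy landscape has many near-equal minima, which is why the text warns against speaking of a “one true map”.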


One map of science is available at mapofscience.com. The same data has also been depicted differently. Both maps are not only visually attractive, as good maps must be, but they are also thought-provoking; consider observations such as “At Physics, science branches in two directions. The northern branch is Chemistry. The southern branch is a variety of engineering disciplines, connecting with the Earth Sciences and Biology. Biotechnology appears where Chemistry and Biology reconnect. This forms the beginning of Infectious Diseases and medical Specialties” (this text is adapted from the “mapofscience” site).

There is an important difference between the ontological linking of scientific paradigms exemplified by EXPO and the reference-based linking used in this map of science. EXPO can discover links which scientists aren’t aware of. Reference-based linking uses references provided by the scientists authoring each study. EXPO gains this advantage because its “understanding” of the research goes far deeper than the shallow information provided by just following the net of references. Still, considering the extra effort needed for EXPO, there’s a lot to be said for the shallow “understanding” of reference.

In this way, the exploration of bibliography links may be seen as a limited-capability but easily-achievable alternative to the approach exemplified by EXPO. While it can only follow the footsteps of human scientists, and while it does not understand why they made each step (does the fact that paper B references paper A show a crucial reliance on its results, or a clarification of how B’s methods are very different from A’s, or a side comment on potential applications of the ideas in A?), it can achieve “pseudo-originality” by combining non-original footsteps of several persons into a foot path, so that no single scientist ever walked along the whole path. We can even imagine the exploration of bibliography links existing side-by-side with EXPO, providing some of the scaffolding that EXPO will need in its early years, before it’s stable and powerful enough on its own.

We can imagine the computer which constructed the “map of science” as a research assistant who is slow and uncreative as far as “real science” is concerned, but who can do some things much better than any of its human masters, alerting them to nearby points of interest and helping them navigate through the dense roads of modern science. To use another analogy, it’s up to human scientists to decide where they want to drive and with what purpose, while they’re supported by the “science map” computer, who plays the role of their GPS-based navigation system.

About the author: Israel Beniaminy has a Bachelor’s degree in Physics and Computer Science, and a Graduate degree in Computer Science. He develops advanced optimization techniques at ClickSoftware technologies, and has published academic papers on numerical analysis, approximation algorithms, and artificial intelligence, as well as articles on fault isolation, service management, and optimization in industry magazines.

Acknowledgement: Some of this text has previously appeared, in different form, in articles by this author which were published in Galileo, the Israeli Magazine of Science and Thought, and appears here with the kind permission of Galileo Magazine.