
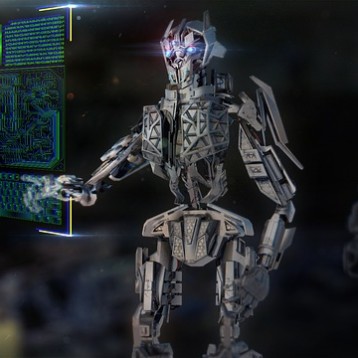

“As far as we know, no other research group has used machine learning to teach a robot to make realistic facial expressions,” said Tingfan Wu, a computer science Ph.D. student from the UC San Diego Jacobs School of Engineering.

The Einstein robot head has around 30 facial muscles, each moved by a tiny servo motor connected to the muscle by a string. Ordinarily, each facial movement has to be programmed by hand, activating selected servos to produce a realistic-looking expression.

Developmental psychologists hypothesize that infants learn to control their bodies through systematic exploratory movements. Initially, these movements appear to be random as infants discover how to control their bodies and reach for objects. “We applied this same idea to the problem of a robot learning to make realistic facial expressions,” said Javier Movellan, senior author on the paper.

The robot begins the learning process by twisting and turning its face in all directions, a technique called ‘body babbling’. During this phase the robot views itself in a mirror and analyzes its own expressions using facial expression detection software called CERT (Computer Expression Recognition Toolbox). This provides the data that machine learning algorithms need to learn a mapping between facial expressions and the movements of the muscle motors.
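To make the idea concrete, here is a minimal sketch of body babbling followed by learning, written in Python. It is not the UC San Diego team's code: the four-servo face, the two expression features, the `TRUE_EFFECT` table, and the `observe_expression` function (a stand-in for the mirror plus CERT) are all assumptions made for illustration, and the simple least-mean-squares fit is one possible learning rule, not necessarily the one used in the study.

```python
import random

N_SERVOS = 4      # the real head has around 30; 4 keeps the sketch small
N_FEATURES = 2    # hypothetical features, e.g. "smile" and "brow raise"

# Hidden behavior of the simulated face: how strongly each servo moves each
# feature. This table is an assumption; the learner never reads it directly.
TRUE_EFFECT = [
    [0.8, 0.6, 0.0, 0.0],   # smile
    [0.0, 0.0, 0.7, 0.5],   # brow raise
]

def observe_expression(servos):
    """Stand-in for looking in the mirror and running an expression detector."""
    return [sum(w * s for w, s in zip(row, servos)) for row in TRUE_EFFECT]

def babble(n_trials, rng):
    """Try random servo commands and record the expression each one produced."""
    data = []
    for _ in range(n_trials):
        servos = [rng.uniform(0.0, 1.0) for _ in range(N_SERVOS)]
        data.append((servos, observe_expression(servos)))
    return data

def fit_linear_map(data, epochs=200, lr=0.1):
    """Least-mean-squares fit of expression = W * servos from babbling data."""
    W = [[0.0] * N_SERVOS for _ in range(N_FEATURES)]
    for _ in range(epochs):
        for servos, target in data:
            for i in range(N_FEATURES):
                pred = sum(w * s for w, s in zip(W[i], servos))
                err = target[i] - pred
                for j in range(N_SERVOS):
                    W[i][j] += lr * err * servos[j]
    return W

rng = random.Random(0)          # fixed seed so the run is repeatable
samples = babble(200, rng)      # the "body babbling" phase
W = fit_linear_map(samples)     # the learning phase

for name, row in zip(["smile", "brow raise"], W):
    print(name, [round(w, 3) for w in row])
```

In this toy setup the learned weights end up close to the hidden table, which is the point of babbling: the mapping is recovered purely from trial commands and observed expressions, without anyone programming it by hand.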

“During the experiment, one of the servos burned out due to misconfiguration. We therefore ran the experiment without that servo. We discovered that the model learned to automatically compensate for the missing servo by activating a combination of nearby servos,” said Movellan.
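One way to picture this kind of compensation is with a small simulated face in which neighboring servos overlap in what they move. The Python sketch below is an illustration only: the `EFFECT` table, the three servos, the target values, and the gradient-descent solver are assumptions made for this example, not the researchers' model. It looks for servo commands that reproduce a target expression, first with every servo working and then with servo 0 held at rest.

```python
# Hypothetical forward model: rows are expression features, columns are servos.
EFFECT = [
    [0.8, 0.6, 0.0],   # smile: driven by servos 0 and 1
    [0.0, 0.3, 0.7],   # brow raise: driven by servos 1 and 2
]

def predict(servos):
    """Expression features the simulated face shows for given servo commands."""
    return [sum(w * s for w, s in zip(row, servos)) for row in EFFECT]

def solve_commands(target, working, steps=5000, lr=0.1):
    """Gradient descent on squared expression error, moving only working servos."""
    servos = [0.0] * len(working)
    for _ in range(steps):
        errors = [t - p for t, p in zip(target, predict(servos))]
        for j, ok in enumerate(working):
            if not ok:
                continue  # a burned-out servo stays at rest
            grad = sum(errors[i] * EFFECT[i][j] for i in range(len(EFFECT)))
            # Keep each command inside the servo's allowed range [0, 1].
            servos[j] = min(1.0, max(0.0, servos[j] + lr * grad))
    return servos

target = [0.5, 0.4]  # desired smile and brow-raise intensities (made up)
healthy = solve_commands(target, working=[True, True, True])
compensated = solve_commands(target, working=[False, True, True])

print("all servos working:", [round(s, 3) for s in healthy])
print("servo 0 burned out:", [round(s, 3) for s in compensated])
```

With servo 0 out of action, the solver drives the neighboring servo 1 harder to recover the smile and eases off servo 2 to keep the brow where it should be, so the target expression is still reached.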


The team is currently working on a more accurate model for generating facial expressions and on a technique for exploring the model space more efficiently. They also noted that the ‘body babbling’ method used so far is not the most efficient way to discover facial expressions.

Although the original objective of this project was to develop a robot that could perform facial expressions it had learned on its own, the scientists say that their work has gradually turned into a study of the psychological aspect of how humans first learn to form facial expressions.

TFOT has previously written about research attempting to teach computers to recognize objects such as glasses or cars. Another project we covered involved ASIMO, in which researchers from Carnegie Mellon University tried to humanize the robot. You are also welcome to check out our coverage of the European Feelix Growing project, in which scientists have developed an emotional robot that has empathy and can recognize human emotions.

Additional information on the Einstein robot can be obtained at the University of California website. You can also view the robot making faces in this short YouTube video.

Icon image credit: UCSD/Nobel Prize Organization