
With ordinary interface devices (e.g., keyboards, mice, touch screens, and ultrasonic pens), human interaction with computers is restricted to a particular device at a fixed location within a small area. The challenge facing human-computer interaction research is to create tangible interfaces that make interaction possible through augmented physical surfaces, graspable objects, and ambient media (walls, tabletops, and even air). The idea is to make the interaction natural and to eliminate the need for a handheld device.

The European Union funded the “Tangible Acoustic Interfaces for Computer Human Interaction” (Tai-Chi) Project, whose scientists are researching acoustics-based remote sensing technologies. The goal of the project is to transmit information about an interaction by using the structure of the object itself as the transmission channel, which removes the need for an overlay or any other intrusive device. This new sensing paradigm may offer some advantages over other interaction methods, such as computer vision or speech recognition. New applications could include wall-size touch panels, three-dimensional interfaces, and robust interactive screens for use in harsh environments. The technology brings the sense of touch into the realm of human-computer interaction.


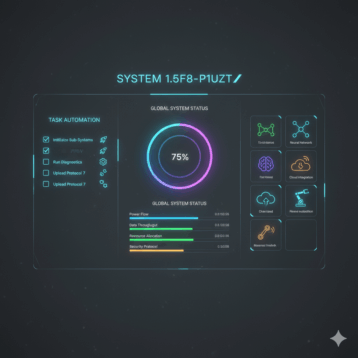

The project’s goal is to develop acoustics-based remote sensing technology that can be adapted to physical objects in order to create tangible interfaces. According to the Tai-Chi Project scientists, users will be able to communicate freely with a computer, an interactive system, or the cyber-world by means of an ordinary, everyday object. Several methods for localizing the contact point are being developed within the framework of the project. Some exploit the location signature embedded in the acoustic wave pattern that a contact produces, while others rely on triangulation and acoustic holography to locate the acoustic source in 3D.
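The location-signature idea can be illustrated with a minimal template-matching sketch. It assumes that one reference recording per touch location is captured during a calibration step, and that a new tap is assigned to the location whose stored template has the highest normalized cross-correlation with it. The function names, the key labels, and the synthetic random signals standing in for real recorded taps are all hypothetical; this is not the Tai-Chi Project's actual algorithm.

```python
import numpy as np


def normalized_xcorr_peak(a, b):
    """Peak absolute value of the normalized cross-correlation of two 1-D signals."""
    # Remove the mean and scale each signal to unit energy so that a perfect
    # match (at the best lag) scores close to 1 regardless of tap loudness.
    a = (a - a.mean()) / (np.linalg.norm(a - a.mean()) + 1e-12)
    b = (b - b.mean()) / (np.linalg.norm(b - b.mean()) + 1e-12)
    # "full" mode checks every possible lag, so small timing offsets are tolerated.
    return np.max(np.abs(np.correlate(a, b, mode="full")))


def match_location(tap, templates):
    """Return the label of the stored template that best matches the new tap."""
    scores = {label: normalized_xcorr_peak(tap, ref) for label, ref in templates.items()}
    return max(scores, key=scores.get)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical calibration: one synthetic "recorded tap" per projected piano key.
    templates = {key: rng.standard_normal(512) for key in ("C", "D", "E")}
    # A new tap at key "E", arriving 10 samples later and with added sensor noise.
    tap = np.concatenate([np.zeros(10), templates["E"][:-10]])
    tap += 0.1 * rng.standard_normal(tap.size)
    print(match_location(tap, templates))  # prints "E"
```

Normalizing both signals keeps the score independent of how hard the surface was struck, while searching over all lags lets the match survive small differences in when the recording starts.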

The Tai-Chi Project covers the study of acoustic physical properties, theoretical development of advanced localization algorithms, hardware design, and demonstrations at public events. The researchers have localized several kinds of user input, such as finger tapping, nail clicking, and knocking, and have even tracked continuous movements such as scratching. The main approaches investigated are time delay of arrival (TDOA), location pattern matching, and acoustic holography. In-air localization, whose aim is to track an acoustic source moving through the air, has also been studied and tested using time delay and Doppler shift. Experiments were successfully conducted on a range of objects, including wood boards, window glass, plastic blocks, and metal sheets.
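Time-delay-based localization can be sketched as follows, under strong simplifying assumptions: a flat board measuring 1 m by 0.6 m, one sensor at each corner, and a single constant wave speed. Real plates are dispersive (the wave speed depends on frequency), so a practical system has to handle that. For every candidate point on a grid, the sketch predicts the arrival-time differences relative to sensor 0 and returns the point that best fits the measured ones. The board size, sensor layout, wave speed, and function names are all hypothetical.

```python
import numpy as np

# Hypothetical setup: a 1 m x 0.6 m board with one sensor at each corner.
SENSORS = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 0.6], [0.0, 0.6]])
SPEED = 500.0  # assumed constant wave speed in m/s (a simplification)


def tdoa_from_point(p, sensors=SENSORS, c=SPEED):
    """Arrival-time differences (s) relative to sensor 0 for a source at point p."""
    t = np.linalg.norm(sensors - p, axis=1) / c
    return t[1:] - t[0]


def locate(measured_tdoa, sensors=SENSORS, c=SPEED, step=0.005):
    """Grid search for the point whose predicted TDOAs best fit the measured ones."""
    xs = np.arange(0.0, 1.0 + step / 2, step)
    ys = np.arange(0.0, 0.6 + step / 2, step)
    gx, gy = np.meshgrid(xs, ys)
    pts = np.stack([gx.ravel(), gy.ravel()], axis=1)                   # (N, 2)
    # Distance from every candidate point to every sensor.
    d = np.linalg.norm(pts[:, None, :] - sensors[None, :, :], axis=2)  # (N, 4)
    t = d / c
    pred = t[:, 1:] - t[:, [0]]                                        # (N, 3)
    # Squared mismatch between predicted and measured time differences.
    err = np.sum((pred - measured_tdoa) ** 2, axis=1)
    return pts[np.argmin(err)]


if __name__ == "__main__":
    true_point = np.array([0.37, 0.21])
    measured = tdoa_from_point(true_point)  # ideal, noise-free delays
    print(locate(measured))  # close to [0.37, 0.21]
```

In practice the measured delays would be estimated by cross-correlating the sensor signals, and a least-squares solver could replace the grid search; the exhaustive grid is used here only because it is short and easy to check.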

The Tai-Chi scientists have demonstrated several applications of the new technology: a virtual piano (an image of a piano keyboard projected onto a white board, which plays the correct notes when touched), a memory game (with the card images projected onto a plastic sheet), a giant tablet (which lets users draw curves by moving a finger continuously across a large wood board), a musical chair, and an interactive Google Earth map.

TFOT recently reported on a new technology that enables speaking without saying a word. We have also covered the development of mind-controlled bionic limbs and of the Noahpad UMPC, a miniature laptop computer with a unique interface.

For more information on the Tai-Chi Project, see the dedicated website.