In an era defined by technological ingenuity, a new challenge is shaking the foundations of authenticity. Deepfakes have been with us for years, and they have distorted our understanding of what is true. Let’s examine their implications, dissect how they work, and outline strategies to guard against the flood of digital deceit that surrounds us.

Defining Deepfakes

Deepfakes are convincing synthetic media generated with advanced artificial intelligence techniques. These media, which include videos, audio clips, and images, are crafted to blend fabricated elements with authentic ones.

Most businesses and organizations use related technology, such as moving-photo effects, for legitimate marketing purposes. Some groups, however, often affiliated with political figures, use similar tools to create a deceptive illusion of genuine footage.

At the core of deepfakes lies a subversive synergy of machine learning algorithms, data manipulation, and visual effects that makes it possible to fabricate content in previously unfathomable ways. Deepfake technology hinges on deep learning algorithms, notably Generative Adversarial Networks (GANs). A GAN consists of two neural networks: a generator and a discriminator. The generator produces counterfeit content, while the discriminator tries to distinguish it from real content. As the two compete over many rounds, the generator becomes better at producing media that mimics facial expressions and speech patterns.
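
To make the generator/discriminator contest concrete, here is a minimal sketch of the standard GAN loss terms: binary cross-entropy for the discriminator and the commonly used "non-saturating" loss for the generator. It works on hypothetical discriminator outputs (probabilities that a sample is real) and is not tied to any particular deepfake tool; the name `gan_losses` is chosen for illustration.

```python
import math


def gan_losses(d_real, d_fake, eps=1e-12):
    """Return (discriminator_loss, generator_loss) for one batch.

    d_real: discriminator probabilities for real samples, D(x).
    d_fake: discriminator probabilities for generated samples, D(G(z)).
    eps guards against log(0).
    """
    n_real, n_fake = len(d_real), len(d_fake)
    # The discriminator wants D(x) -> 1 for real samples and D(G(z)) -> 0 for fakes.
    d_loss = (-sum(math.log(p + eps) for p in d_real) / n_real
              - sum(math.log(1 - p + eps) for p in d_fake) / n_fake)
    # The generator (non-saturating form) wants D(G(z)) -> 1, i.e. to fool D.
    g_loss = -sum(math.log(p + eps) for p in d_fake) / n_fake
    return d_loss, g_loss
```

When the discriminator confidently rejects the fakes, the generator's loss is large; as the generator learns to fool the discriminator, its loss drops. Training alternates gradient steps on these two losses.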

The origins of deepfakes trace back to playful experiments within academia, but their applications have since snowballed into realms ranging from entertainment to political manipulation.

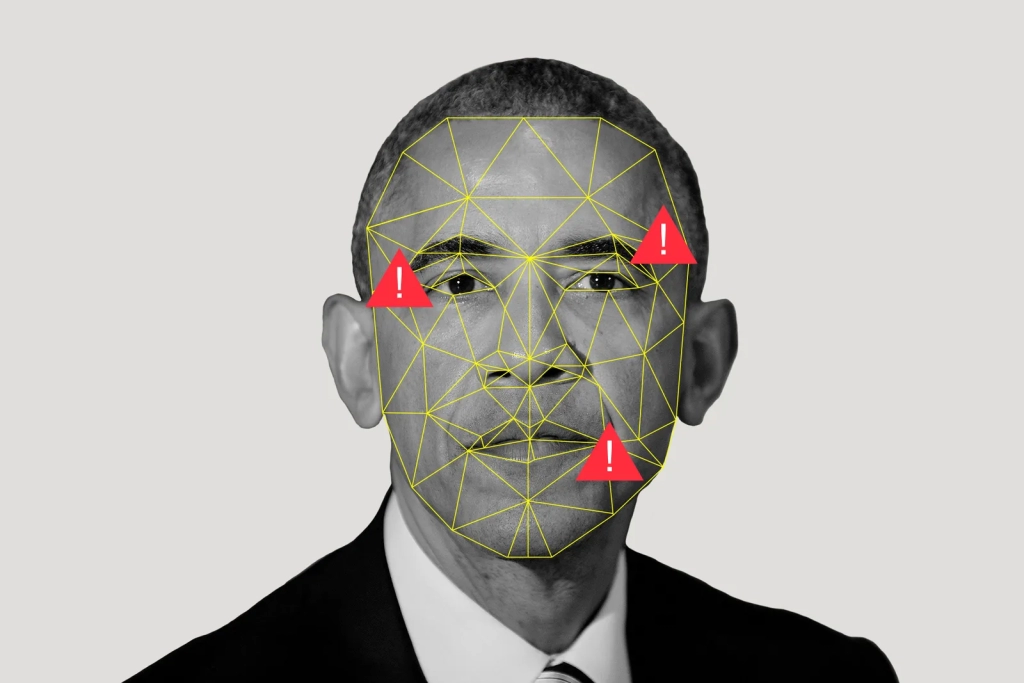

The potential for deepfake-driven mischief and manipulation looms especially large in politics. With widespread access to powerful computing and open-source tools, creating and disseminating deepfakes has become increasingly easy.

The Deep Learning Alchemy: Data Transformation and Manipulation

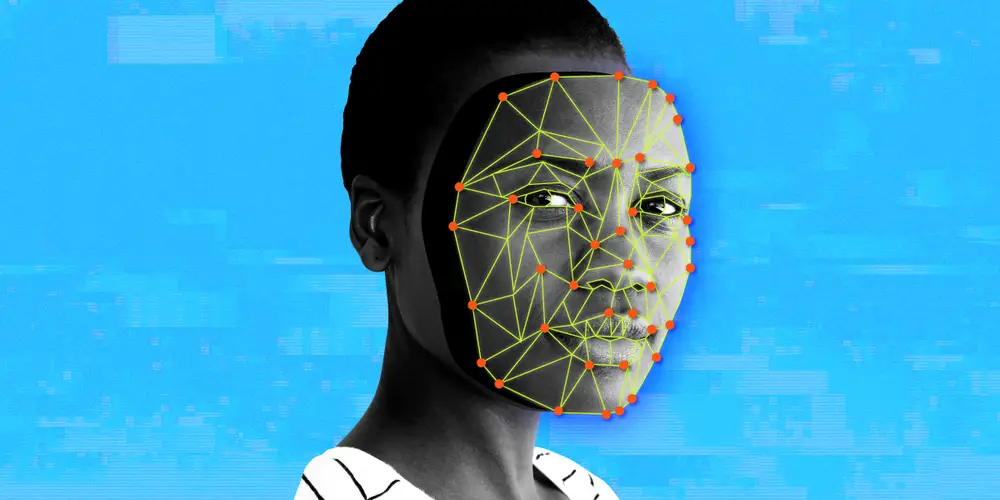

Deepfakes thrive on the availability of abundant data. From celebrity photos to recorded public speeches, this raw material fuels the machine learning algorithms that power deepfake creation. Data manipulation techniques, such as facial landmark extraction and attribute manipulation, further enhance the realism of the fabricated content, making it hard to distinguish from the real thing.

Data Selection and Model Training

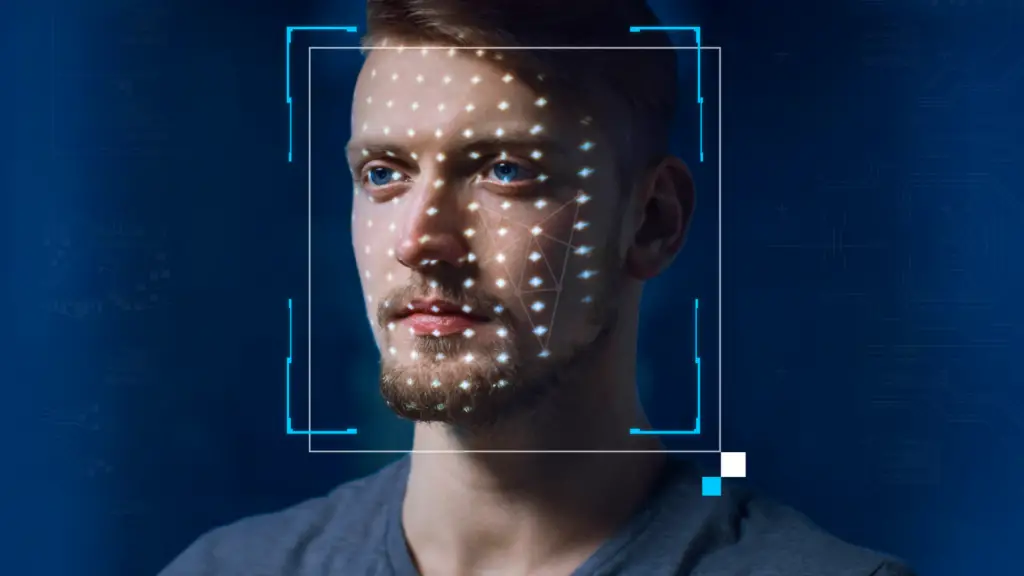

The process begins with data selection: choosing a target individual whose likeness will be replicated. The collected images and footage of that person are then used to train the GANs to generate media that mimics the target’s appearance and behavior.

During the creation process, facial landmarks and other features are extracted from both the target and the source media. The source’s features are then mapped onto the target’s face so that expressions, movements, and lip-syncing align convincingly with the synthesized content.
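
One common ingredient of mapping features between faces is aligning two sets of landmarks. The sketch below assumes landmarks have already been extracted as `(x, y)` points by some upstream detector (not shown), and fits the least-squares similarity transform (scale, rotation, translation) that carries the source landmarks onto the target's. Real pipelines go further, with per-region warping and blending; `fit_similarity` and `apply_similarity` are illustrative names.

```python
def fit_similarity(src, dst):
    """Least-squares 2D similarity transform mapping src landmarks onto dst.

    Points are (x, y) tuples treated as complex numbers, so the model is
    dst ≈ a * src + b, where complex a encodes scale and rotation and
    complex b encodes translation.
    """
    s = [complex(x, y) for x, y in src]
    d = [complex(x, y) for x, y in dst]
    n = len(s)
    s_mean, d_mean = sum(s) / n, sum(d) / n
    # Closed-form least-squares solution on mean-centred points.
    num = sum((si - s_mean).conjugate() * (di - d_mean) for si, di in zip(s, d))
    den = sum(abs(si - s_mean) ** 2 for si in s)
    a = num / den
    b = d_mean - a * s_mean
    return a, b


def apply_similarity(a, b, points):
    """Apply the fitted transform to a list of (x, y) points."""
    out = []
    for x, y in points:
        z = a * complex(x, y) + b
        out.append((z.real, z.imag))
    return out
```

Using complex arithmetic keeps the 2D fit to a few lines: `abs(a)` is the scale factor and the argument of `a` is the rotation angle.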

The generator and discriminator refine their outputs through numerous training cycles: in each cycle, the generator produces new candidates, and the discriminator judges whether they resemble real content.

Detecting Illusion: Unveiling the Veil of Deception

Even with AI-generated content, telltale signs can still give a fake away. Imperfections such as mismatched facial features, odd lighting, or unrealistic shadows can indicate manipulation. But as deepfake technology improves, it becomes better at minimizing these visual discrepancies. Distinguishing a fake is arguably harder today than it was a decade ago.

Audio is another pivotal element in deepfake detection. Misalignment between speech and facial movements can expose a fabricated video: if the lip movements don’t match the spoken words, the footage may have been manipulated.
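
A minimal sketch of a lip-sync check, assuming two equal-length per-frame signals have already been extracted by hypothetical upstream steps: audio loudness (energy) for each video frame, and a mouth-opening measurement derived from facial landmarks. It reports the best Pearson correlation over a few frame offsets; a persistently low score is one weak hint of misalignment. Production detectors generally rely on learned audio-visual models rather than a single correlation, and the function names here are illustrative.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0  # a flat signal carries no sync information
    return cov / math.sqrt(vx * vy)


def lip_sync_score(audio_energy, mouth_opening, max_lag=3):
    """Best correlation between audio energy and mouth opening, allowing the
    mouth signal to lead or trail the audio by up to max_lag frames.
    Assumes both lists have the same length."""
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, m = audio_energy[lag:], mouth_opening[:len(mouth_opening) - lag]
        else:
            a, m = audio_energy[:lag], mouth_opening[-lag:]
        if len(a) > 2:
            best = max(best, pearson(a, m))
    return best
```

The small lag window tolerates a slight, constant audio/video offset (common even in genuine footage) without rewarding arbitrary shifts.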

Overall, as deepfakes grow more convincing, the arms race between creation and detection intensifies. AI-powered detection tools use machine learning to scrutinize videos for subtle signs of manipulation, examining features, movements, and patterns the human eye might overlook. This makes them an increasingly powerful means of detecting fake content.
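
As a toy illustration of the learning idea behind such detectors (not how production systems are built), the sketch below trains a logistic-regression classifier on hypothetical per-video feature scores, for example a lighting-inconsistency score and a blink-irregularity score. Real detectors are typically deep neural networks trained on large labeled datasets of genuine and manipulated media; the features, the data, and the names `train_logistic` and `predict` are assumptions made for illustration.

```python
import math


def predict(w, b, x):
    """Probability that feature vector x comes from manipulated media."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 / (1 + math.exp(-z))


def train_logistic(features, labels, lr=0.5, epochs=2000):
    """Fit logistic regression with batch gradient descent.

    features: list of equal-length feature vectors (hypothetical scores).
    labels: 1 = manipulated, 0 = authentic.
    """
    n, n_feat = len(features), len(features[0])
    w, b = [0.0] * n_feat, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_feat, 0.0
        for x, y in zip(features, labels):
            err = predict(w, b, x) - y  # cross-entropy gradient w.r.t. the logit
            for j in range(n_feat):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b
```

With two well-separated clusters of examples, a point far inside the "manipulated" cluster should receive a probability above 0.5; real-world features are rarely this cleanly separable.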

Blockchain offers a complementary approach: instead of hunting for fakes, it helps verify authentic content. Timestamping content (or a cryptographic hash of it) on a distributed ledger creates a tamper-evident record of authenticity. Experts can then confirm whether a video has been altered since it was registered.
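
To show why a ledger entry makes tampering evident, here is a toy hash-chained log: a single-process stand-in for a blockchain, with no network, consensus, or trusted clock. Each block stores the SHA-256 hash of the content, a timestamp, and the previous block's hash. Any edit to a registered video changes its hash, and editing an old block breaks the chain. The `Ledger` class and its methods are hypothetical.

```python
import hashlib
import json
import time


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class Ledger:
    """Toy append-only, hash-chained ledger (single node, no consensus)."""

    GENESIS = "0" * 64

    def __init__(self):
        self.blocks = []

    def register(self, content: bytes, timestamp=None):
        prev = self.blocks[-1]["block_hash"] if self.blocks else self.GENESIS
        record = {
            "content_hash": sha256_hex(content),
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev_hash": prev,
        }
        # The block hash covers the record, chaining it to its predecessor.
        record["block_hash"] = sha256_hex(json.dumps(record, sort_keys=True).encode())
        self.blocks.append(record)
        return record

    def chain_is_intact(self) -> bool:
        prev = self.GENESIS
        for block in self.blocks:
            body = {k: v for k, v in block.items() if k != "block_hash"}
            if block["prev_hash"] != prev:
                return False
            if sha256_hex(json.dumps(body, sort_keys=True).encode()) != block["block_hash"]:
                return False
            prev = block["block_hash"]
        return True

    def is_registered(self, content: bytes) -> bool:
        h = sha256_hex(content)
        return any(block["content_hash"] == h for block in self.blocks)
```

Note that a ledger can only vouch for content from the moment it was registered; it cannot tell whether the content was already fake at that point.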

The Arsenal of Protection

Advanced authentication mechanisms are constantly being developed to validate digital content. From watermarking techniques to embedded metadata, these solutions aim to provide verifiable proof of a video’s origin and integrity.
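
The embedded-metadata idea can be sketched as a signed provenance manifest that binds metadata (such as the creator or capture device) to a hash of the content. For simplicity this sketch signs with an HMAC and a shared secret key; real provenance standards such as C2PA use public-key signatures and certificates so that anyone can verify without holding a secret. The function names and manifest layout are assumptions for illustration.

```python
import hashlib
import hmac
import json


def make_manifest(content: bytes, metadata: dict, key: bytes) -> dict:
    """Bind metadata to the content's hash and sign both with an HMAC tag."""
    payload = {"content_sha256": hashlib.sha256(content).hexdigest(),
               "metadata": metadata}
    msg = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(key, msg, hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_manifest(content: bytes, manifest: dict, key: bytes) -> bool:
    """True only if the manifest is untampered and matches the content."""
    payload = manifest["payload"]
    msg = json.dumps(payload, sort_keys=True).encode()
    expected = hmac.new(key, msg, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(expected, manifest["tag"]):
        return False  # metadata or tag was altered, or the key is wrong
    return payload["content_sha256"] == hashlib.sha256(content).hexdigest()
```

Verification fails if the video bytes change, if the metadata is swapped, or if the tag was produced with a different key.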

Moreover, AI-driven tools can spot many convincing deepfakes. These utilities use machine learning to analyze intricate details, such as microexpressions and other inconsistencies, helping content creators and consumers identify manipulated media.

Media literacy education is another formidable weapon against the spread of misinformation and psychological operations. By developing critical thinking skills, individuals learn to scrutinize media, recognize visual cues, and question information sources. This training makes people more discerning consumers of digital media.

Educational institutions also play a pivotal role in shaping the media-savvy citizens of tomorrow. Integrating digital literacy into curricula equips students with the tools to navigate the ever-evolving digital landscape. We fortify future generations against the onslaught of deepfake misinformation by teaching them to identify and analyze digital manipulations.

Bottom Line

In a world where reality can be reshaped at will, the rise of deepfakes is a call to join forces. Their consequences (spreading false information, exploiting people, and weakening trust) demand a strong response. As we study how deepfakes are created and detected, one thing is clear: we must not let deception prevail. Businesses and communities must team up to build strong defenses, teach everyone to judge critically what they see, and set clear rules.

Even though the landscape of deception keeps changing, our strength comes from committing to the truth, staying transparent, and preparing for a future where lies find it harder to spread. Challenges lie ahead, but with knowledge, technology, and a mindful approach, we can protect our digital world from the growing tide of fabricated agendas.