Students James Patten and Ben Recht developed the Audiopad to offer a new composing experience built on existing techniques. Patten and Recht say that a performer can simultaneously pull sounds from a giant set of samples, cut between drum loops to create new beats, and apply digital processing, all on the same table. In addition, they say that the Audiopad creates a visual and tactile dialogue between the performer and the audience.

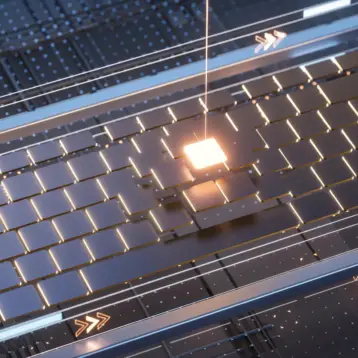

The Audiopad consists of a matrix of antenna elements that track the positions of electronically tagged objects on a tabletop surface. The Audiopad software translates this positioning information into musical and graphical feedback on the tabletop, where each object represents either a musical track or a microphone.
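The translation from tracked positions to musical feedback can be illustrated with a minimal sketch. It is not the Audiopad's actual software: the `Puck` type, the `track_volumes` function, and the rule that a track gets louder as it moves closer to a microphone object are all assumptions made purely for illustration.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Puck:
    """A tagged object on the table (hypothetical representation)."""
    tag_id: str
    role: str   # "track" or "microphone"
    x: float    # position on the tabletop, arbitrary units
    y: float


def track_volumes(pucks, max_distance=1.0):
    """Map each track puck to a volume in [0, 1].

    Assumed rule (not taken from the Audiopad itself): volume falls off
    linearly with distance to the nearest microphone puck and reaches
    zero at max_distance.
    """
    mics = [p for p in pucks if p.role == "microphone"]
    volumes = {}
    for puck in pucks:
        if puck.role != "track":
            continue
        if not mics:
            # No microphone on the table: treat every track as silent.
            volumes[puck.tag_id] = 0.0
            continue
        nearest = min(hypot(puck.x - m.x, puck.y - m.y) for m in mics)
        volumes[puck.tag_id] = max(0.0, 1.0 - nearest / max_distance)
    return volumes


if __name__ == "__main__":
    table = [
        Puck("mic", "microphone", 0.0, 0.0),
        Puck("drums", "track", 0.5, 0.0),
        Puck("bass", "track", 2.0, 0.0),
    ]
    print(track_volumes(table))
```

In this sketch, sliding the "drums" puck toward the microphone puck raises its volume, while the distant "bass" puck stays silent. A real system would re-run a mapping like this each time the antenna matrix reports new positions.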

The performer can load their own audio samples and seamlessly combine them with the samples that are pre-installed on the device. Patten and Recht reject the use of a simple touch screen or tablet PC and say that the Audiopad was designed so that one can interact with the device by moving multiple physical objects. According to Patten and Recht, it is much easier to interact with physical objects than with touch screens because your hands receive passive tactile feedback from the objects, helping you to move them without requiring much visual attention.

TFOT has covered several multi-touch technologies, including Microsoft’s innovative Surface Computing table, the PICO two-way man-machine interactive technology (which is similar in many respects to the Audiopad but has different applications), the Interactive 3D Virtual Environment, which was also developed at MIT, and of course the original multi-touch technology developed by Jeff Han of NYU (now also of Perceptive Pixel).

More information about the Audiopad can be found on their website, where you can also watch videos and see how the technology works.