
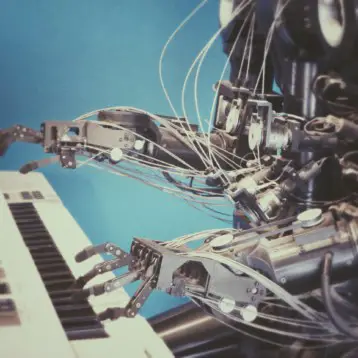

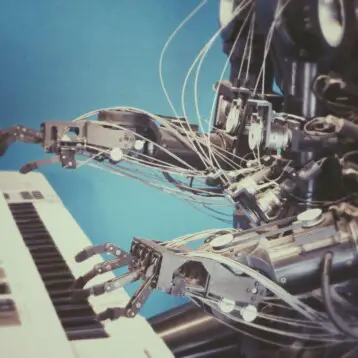

One problem facing robotic hands that try to mimic the motion of real human hands is the excessive processing time required to support a full twenty independent degrees of freedom. By limiting the independent degrees of freedom to reflect how real hands actually move, Professor Peter Allen of the Columbia Robotics Group hopes to simplify the real-time processing enough to overcome the latency and other problems seen in other models, without losing any functionality. Because it models real movements more accurately, this technology could also lead to better prosthetic hands that permit more natural motions and integrate more easily with the remaining biological signals.
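
As a rough illustration of what limiting the independent degrees of freedom can mean in practice, the sketch below drives all twenty joints from just two control values. It assumes a simple linear mapping (each control value scales a fixed pattern of joint motion); the article does not say which mapping the Columbia group uses, and the names `expand_to_joint_angles`, `CLOSE_ALL`, and `SPREAD` are hypothetical.

```python
from typing import List, Sequence

NUM_JOINTS = 20  # the full set of joint angles mentioned in the article


def expand_to_joint_angles(
    coeffs: Sequence[float],
    basis: Sequence[Sequence[float]],
    rest_pose: Sequence[float],
) -> List[float]:
    """Map a few control values onto every joint angle.

    Assumption for illustration only: the hand pose is the rest pose plus a
    weighted sum of fixed joint-motion patterns (one pattern per control value).
    """
    if len(coeffs) != len(basis):
        raise ValueError("need exactly one coefficient per basis pattern")
    angles = list(rest_pose)
    for weight, pattern in zip(coeffs, basis):
        if len(pattern) != len(angles):
            raise ValueError("each pattern must cover every joint")
        for joint, delta in enumerate(pattern):
            angles[joint] += weight * delta
    return angles


# Two made-up patterns: curl every joint together, or spread alternate joints.
CLOSE_ALL = [1.0] * NUM_JOINTS
SPREAD = [1.0 if j % 2 == 0 else -1.0 for j in range(NUM_JOINTS)]
REST = [0.0] * NUM_JOINTS

# The controller now searches over 2 numbers instead of 20.
pose = expand_to_joint_angles([0.5, 0.0], [CLOSE_ALL, SPREAD], REST)
```

The point of the sketch is only the dimensionality: a planner that works in the two-value space has far fewer combinations to evaluate in real time than one that sets all twenty joints independently.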

Allen and his Ph.D. student Matei Ciocarlie use a custom simulator called GraspIt! to create initial models of robotic hands and the algorithms needed to control them. The simulator accounts not only for allowed motions but also for force restrictions and wear on joints in its calculations. It also integrates directly with physical robotic models and various sensors, allowing both virtual and initial physical tests to be run from the same controller.

The software uses a two-step process to determine the best way to grasp an object. First, it computes a variety of possible grasping motions based on the angle and speed of the hand as it approaches the object. Once this list of candidates is compiled, the controller searches it for the series of motions that will provide the greatest stability. The controller then instructs the robotic hand to follow the selected pattern and grasp the object.
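
The two-step process described above (generate candidates, then pick the most stable) can be sketched as follows. This is a minimal illustration, not GraspIt!'s actual code or API: the `Approach` and `GraspCandidate` types, the preshape names, and especially `toy_stability` are hypothetical stand-ins, and a real controller would score stability from a physical model of contacts and forces.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Approach:
    angle_deg: float  # angle of the hand relative to the object (assumed units)
    speed: float      # approach speed (arbitrary units)


@dataclass(frozen=True)
class GraspCandidate:
    approach: Approach
    motions: Tuple[str, ...]  # ordered series of motions for the hand


def generate_candidates(
    approaches: Sequence[Approach], preshapes: Sequence[str]
) -> List[GraspCandidate]:
    """Step 1: list possible grasping motions for each approach."""
    return [
        GraspCandidate(a, (f"preshape:{p}", "close_fingers"))
        for a in approaches
        for p in preshapes
    ]


def select_most_stable(
    candidates: Sequence[GraspCandidate],
    stability: Callable[[GraspCandidate], float],
) -> GraspCandidate:
    """Step 2: search the list for the candidate with the highest score."""
    if not candidates:
        raise ValueError("no grasp candidates to choose from")
    return max(candidates, key=stability)


def toy_stability(candidate: GraspCandidate) -> float:
    """Placeholder score: favors slow, head-on approaches. Purely illustrative."""
    a = candidate.approach
    return 1.0 / (1.0 + a.speed) - abs(a.angle_deg) / 180.0


approaches = [Approach(0.0, 1.0), Approach(45.0, 0.5), Approach(90.0, 0.2)]
candidates = generate_candidates(approaches, ["pinch", "power"])
best = select_most_stable(candidates, toy_stability)
```

Separating generation from selection keeps the scoring function swappable, so the same candidate list could be evaluated in simulation first and against sensor data later.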

In initial simulations and tests, four different models of mechanical hands successfully grasped a variety of objects, including a wine glass, a model airplane, and a telephone.

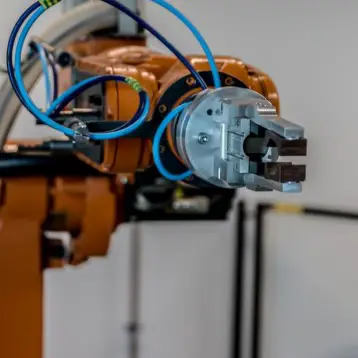

TFOT has previously reported on a robotic hand developed by Virginia Tech that is powered by compressed air. TFOT has also reported on other biomimetic robots, including the RoboClam smart anchor based on the razor clam, a jumping robot inspired by grasshoppers, the GhostSwimmer swimming robot based on the bluefin tuna, a miniature spy plane inspired by bats, and an amphibious swimming and crawling robot based on a snake.

Read more about the new robotic hand controller and watch movies of it in operation at the Columbia Robotics Lab page devoted to grasping research. More detail about the GraspIt! simulator is available at Matei Ciocarlie’s GraspIt! page.